How TabPFN is Transforming Financial Risk Management

At Prior Labs, we're witnessing something remarkable: the emergence of "tabular foundation models" that bring the pre-training revolution of LLMs to structured data. Our TabPFN (Tabular Prior-Data Fitted Networks) model, recently published in Nature, represents a fundamental shift in how organizations approach machine learning on tabular data.

Through our partnership with Taktile, a leading decision intelligence platform, we're seeing TabPFN deliver exceptional results in real-world financial applications, and the implications extend far beyond what we initially imagined.

The Pre-Training Revolution Comes to Tabular Data

The success of large language models like GPT has been built on a simple but powerful idea: pre-train a model on vast amounts of data, then adapt it to specific tasks without retraining. Until recently, this approach seemed limited to unstructured data like text and images.

TabPFN changes that. Our model brings pre-training to tabular data by learning from millions of synthetic datasets during pre-training, then applying that knowledge to real-world datasets through in-context learning. When you make a prediction with TabPFN, you provide labeled rows of your data alongside unlabeled rows, and the model fills in predictions for the unlabeled rows, similar to how LLMs use examples in prompts.

Remarkably, there's no model training in the traditional sense. TabPFN's parameters stay fixed throughout, relying instead on the transformer architecture's flexibility to learn patterns in your data while making predictions.
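To make the "labeled rows in, predictions out" pattern concrete, here is a minimal sketch of an in-context predictor. It is a toy stand-in, not TabPFN: the class `InContextKNN` is hypothetical and uses nearest neighbours instead of a pre-trained transformer. What it shares with the description above is the shape of the workflow: `fit` only stores the labeled rows as context, nothing is optimized, and predictions are computed by conditioning on that context.

```python
import math
from collections import Counter


class InContextKNN:
    """Toy stand-in for an in-context predictor (NOT TabPFN itself).

    There are no trainable parameters: fit() only stores the labeled rows,
    and predict_proba() conditions on them at prediction time.
    """

    def __init__(self, k=3):
        self.k = k

    def fit(self, rows, labels):
        # No optimization happens here; the labeled rows become the context.
        self.rows = [tuple(r) for r in rows]
        self.labels = list(labels)
        self.classes = sorted(set(labels))
        return self

    def predict_proba(self, queries):
        out = []
        for q in queries:
            # Rank context rows by Euclidean distance to the query row.
            nearest = sorted(
                zip(self.rows, self.labels),
                key=lambda pair: math.dist(pair[0], q),
            )[: self.k]
            votes = Counter(label for _, label in nearest)
            out.append([votes[c] / len(nearest) for c in self.classes])
        return out


# Labeled rows (context) and unlabeled rows (queries); values are made up.
context_X = [(0.1, 0.2), (0.2, 0.1), (0.9, 0.8), (0.8, 0.9)]
context_y = [0, 0, 1, 1]
query_X = [(0.15, 0.15), (0.85, 0.85)]

model = InContextKNN(k=2).fit(context_X, context_y)
probs = model.predict_proba(query_X)
print(probs)  # one probability per class for each query row
```

Swapping the context rows changes the predictions immediately, with no retraining step in between, which is the property the paragraph above describes.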

Why TabPFN Excels for Risk Practitioners

Our case study with Taktile has revealed why TabPFN is particularly powerful for decision intelligence applications:

- Small Data Excellence: TabPFN performs exceptionally well in small-data regimes, which is invaluable for fraud, credit, and anti-money laundering applications where many companies have relatively few true positives.

- Zero Training Infrastructure: Teams don't need complex retraining and MLOps infrastructure. With no retraining, there is less overhead from model validation, testing, and signoff processes.

- Domain Expert Empowerment: Domain experts can now create competitive model predictions without years of data science experience, enabling smaller, faster, and more empowered teams.

TabPFN also includes built-in features that eliminate common ML friction points:

- Native Distribution Output: Returns distributions for results that can be used in downstream decisions

- Missing Value Handling: Native handling of missing values and outliers without imputation strategies

- Built-in Explainability: Returns explanations using Shapley values, addressing black-box AI concerns
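For readers unfamiliar with Shapley values, the sketch below computes them exactly for a tiny example by averaging each feature's marginal contribution over every feature ordering. It is an illustration of the concept only and says nothing about how TabPFN computes its explanations. The scoring function `toy_score` and the feature names `velocity` and `new_device` are hypothetical.

```python
from itertools import permutations


def shapley_values(predict, row, baseline):
    """Exact Shapley values via all feature orderings.

    Features not yet revealed keep their baseline value. The cost grows
    factorially, so this is only feasible for a handful of features.
    """
    names = list(row)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        previous = predict(current)
        for name in order:
            current[name] = row[name]  # reveal this feature's actual value
            score = predict(current)
            totals[name] += score - previous  # marginal contribution
            previous = score
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_score(x):
    """Hypothetical risk score with an interaction term (not a real model)."""
    return (
        0.5 * x["velocity"]
        + 0.25 * x["new_device"]
        + 0.25 * x["velocity"] * x["new_device"]
    )


row = {"velocity": 1.0, "new_device": 1.0}
baseline = {"velocity": 0.0, "new_device": 0.0}
phi = shapley_values(toy_score, row, baseline)
print(phi)  # {'velocity': 0.625, 'new_device': 0.375}
```

The two attributions sum to 1.0, the difference between the score for the row and the score for the baseline, and the interaction term is split evenly between the two features.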

Real-World Applications Through Taktile

Our collaboration with Taktile demonstrates TabPFN's practical impact across financial services use cases.

1. Fraud Checks

We benchmarked TabPFN's performance on an anonymized, real-world account opening fraud dataset, comparing it to standard boosted trees approaches. The results showcase TabPFN's power: the sparser the data, the larger TabPFN's margin over classical machine learning approaches.

TabPFN performs well even with very few labeled examples, which is the power of pre-training in action. This capability is particularly valuable for fraud detection scenarios where labeled examples are inherently limited.
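A comparison like this can be set up as a learning curve: keep the test set fixed, shrink the labeled sample, and score each model at every size. The sketch below shows one way to do that with ROC AUC as the metric. The metric choice, the `fit_predict` callable, the `centroid_scorer` baseline, and the synthetic data are all assumptions made for illustration; they are not the benchmark setup or results described above.

```python
import random


def roc_auc(labels, scores):
    """ROC AUC: chance that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)  # ties count half
    return wins / (len(pos) * len(neg))


def learning_curve(fit_predict, train, test, sizes, seed=0):
    """Score a model on a fixed test set while shrinking the labeled sample.

    fit_predict(train_rows, test_features) -> list of scores (hypothetical callable).
    train and test are lists of (features, label) pairs.
    """
    rng = random.Random(seed)
    test_X = [x for x, _ in test]
    test_y = [y for _, y in test]
    results = {}
    for n in sizes:
        sample = rng.sample(train, n)
        results[n] = roc_auc(test_y, fit_predict(sample, test_X))
    return results


def centroid_scorer(train_rows, test_X):
    """Hypothetical one-feature baseline: closer to the fraud mean scores higher."""
    pos = [x[0] for x, y in train_rows if y == 1]
    neg = [x[0] for x, y in train_rows if y == 0]
    if not pos or not neg:
        return [0.0 for _ in test_X]  # uninformative if a class is missing
    mu_pos, mu_neg = sum(pos) / len(pos), sum(neg) / len(neg)
    return [abs(x[0] - mu_neg) - abs(x[0] - mu_pos) for x in test_X]


data_rng = random.Random(42)


def make_rows(n, fraud_rate=0.1):
    """Synthetic rows: fraud cases have a shifted feature mean (made-up data)."""
    rows = []
    for _ in range(n):
        y = 1 if data_rng.random() < fraud_rate else 0
        rows.append(((data_rng.gauss(1.5 if y else 0.0, 1.0),), y))
    return rows


train, test = make_rows(2000), make_rows(1000)
curve = learning_curve(centroid_scorer, train, test, sizes=[20, 200, 2000])
for n, auc in curve.items():
    print(f"{n:>5} labeled rows -> AUC {auc:.3f}")
```

Running the same harness with two different `fit_predict` callables gives a side-by-side view of how each model degrades as labels become scarce.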

2. Credit Risk Anomaly Detection

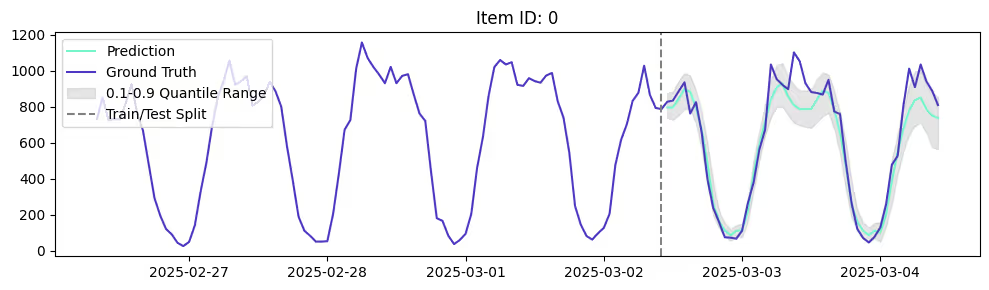

Another exciting application is anomaly detection for credit risk management. TabPFN ranked #1 in time series settings on industry benchmarks, even outperforming Amazon's Chronos model. This makes it valuable for forecasting approval rates and monitoring key metrics like application volumes and FICO score distributions.

When observed metrics drift too far from expected distributions, teams can raise alerts for analysts to investigate potential anomalies, enabling proactive rather than reactive risk management.
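The alerting step can be sketched in a few lines, assuming the forecasting model returns a lower and upper bound for each time step. The `Forecast` container, the `find_anomalies` helper, and the approval-rate numbers below are hypothetical and exist only to show the comparison logic.

```python
from dataclasses import dataclass


@dataclass
class Forecast:
    """Forecast summary for one time step (hypothetical container)."""
    lower: float   # lower bound of the expected range
    median: float  # central forecast
    upper: float   # upper bound of the expected range


def find_anomalies(observed, forecasts):
    """Return (step, value, direction) for observations outside the expected range."""
    alerts = []
    for step, (value, fc) in enumerate(zip(observed, forecasts)):
        if value < fc.lower:
            alerts.append((step, value, "below"))
        elif value > fc.upper:
            alerts.append((step, value, "above"))
    return alerts


# Made-up daily approval rates against a flat forecast band.
forecasts = [Forecast(lower=0.55, median=0.61, upper=0.67) for _ in range(4)]
observed = [0.62, 0.60, 0.41, 0.63]

alerts = find_anomalies(observed, forecasts)
print(alerts)  # [(2, 0.41, 'below')]
```

How wide to make the band is a policy decision: a narrower range surfaces drift earlier at the cost of more alerts for analysts to review.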

The Technical Innovation

TabPFN's success stems from several key innovations in our approach to pre-training for tabular data. We developed a novel synthetic data generation process that creates millions of tabular datasets with varying statistical properties, column types, and relationship structures.
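To give a feel for what "generating synthetic tabular datasets" can mean, here is a deliberately simple sketch. It is not the generation process used for TabPFN, which this post does not specify. It only assumes the general idea stated above: draw a random dependency structure, generate columns from it with noise, vary the column types, and pick one column as the target. The function `sample_synthetic_dataset` and all of its parameters are hypothetical.

```python
import math
import random
from statistics import median


def sample_synthetic_dataset(rng, n_rows=100, n_features=4):
    """Draw one toy dataset in which each column depends on earlier columns."""
    n_cols = n_features + 1  # the last column becomes the target

    # Random sparse weights decide which earlier columns feed each later one.
    weights = [
        [rng.gauss(0.0, 1.0) if rng.random() < 0.5 else 0.0 for _ in range(j)]
        for j in range(n_cols)
    ]
    noise_scale = rng.uniform(0.1, 1.0)  # varies per dataset

    table = []
    for _ in range(n_rows):
        values = []
        for j in range(n_cols):
            signal = sum(w * v for w, v in zip(weights[j], values))
            values.append(math.tanh(signal) + rng.gauss(0.0, noise_scale))
        table.append(values)

    # Vary column types: turn one randomly chosen feature into a 3-level category.
    cat_col = rng.randrange(n_features)
    for values in table:
        values[cat_col] = min(2, max(0, int(values[cat_col] + 1.5)))

    # Binarize the target at its median to get a classification task.
    threshold = median(values[-1] for values in table)
    features = [values[:-1] for values in table]
    labels = [1 if values[-1] > threshold else 0 for values in table]
    return features, labels


features, labels = sample_synthetic_dataset(random.Random(0))
print(len(features), len(features[0]), sum(labels))
```

Calling the function with different seeds yields datasets with different structures and noise levels, which is the kind of variety a pre-training corpus of synthetic tables would need at far larger scale.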

Our transformer architecture has been specifically adapted for tabular data, with custom mechanisms that handle mixed data types (numerical, categorical, and missing values) within the same model. This eliminates the preprocessing complexity that typically plagues tabular ML pipelines.

The in-context learning approach means TabPFN adapts to new datasets and prediction tasks without parameter updates, similar to how large language models handle few-shot learning tasks.

We're transparent about current limitations: TabPFN has relatively high inference latency and caps on supported dataset size. We expect both to improve rapidly throughout 2025 as we continue developing the technology.

These limitations don't diminish TabPFN's current value for most decision intelligence use cases, where decision quality often matters more than millisecond-level latency, and datasets frequently fall within our current capabilities.

Looking Forward: The Future of Tabular AI

Through our work with Taktile and other partners, we're seeing TabPFN catalyze a broader transformation in how organizations approach tabular machine learning. The pre-training paradigm is enabling faster deployment, better performance on small datasets, and democratized access to advanced AI capabilities.

As we continue expanding TabPFN's capabilities, we're excited about the possibilities ahead. The combination of pre-trained models and decision intelligence platforms like Taktile is creating new opportunities for organizations to leverage AI in ways that were previously impractical or impossible.

Our Nature publication was just the beginning. The real impact comes from seeing TabPFN solve real-world problems in production environments.

Ready to explore how TabPFN can transform your approach to tabular machine learning? We're eager to discuss how our pre-trained models can deliver superior results with unprecedented simplicity for your specific use cases.