Extensions

TabPFN was built to deliver fast, accurate predictions out of the box, with minimal preprocessing and no hyperparameter tuning. But we’ve always known that the off-the-shelf experience is only the starting point. If you’ve ever thought, “TabPFN is close, but I need X…”, keep reading.

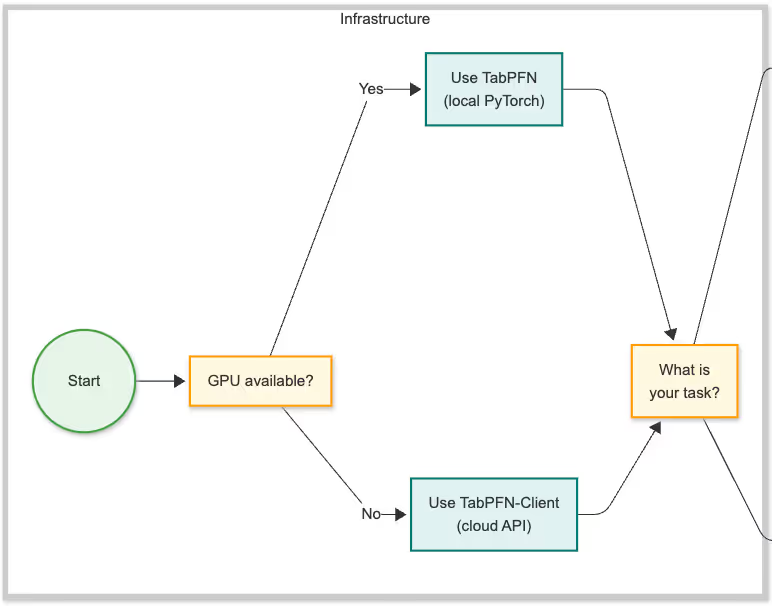

Thanks to your feedback, use cases, and contributions, the TabPFN Extensions package has grown into a powerful layer on top of the core model, and we spend significant internal development time expanding the model’s reach and enabling new workflows. Shoutout to Klemens Flöge for putting together this flowchart to help you navigate what’s available.

We’re reintroducing the extensions today with a visual guide to help you discover everything you can now do with TabPFN.

Using TabPFN without a GPU?

Start with our API client. The extensions below work with both the API and the local package, so GPU constraints don’t limit your options. The API also gives you access to the latest TabPFN models, including support for text features, through a simple interface.

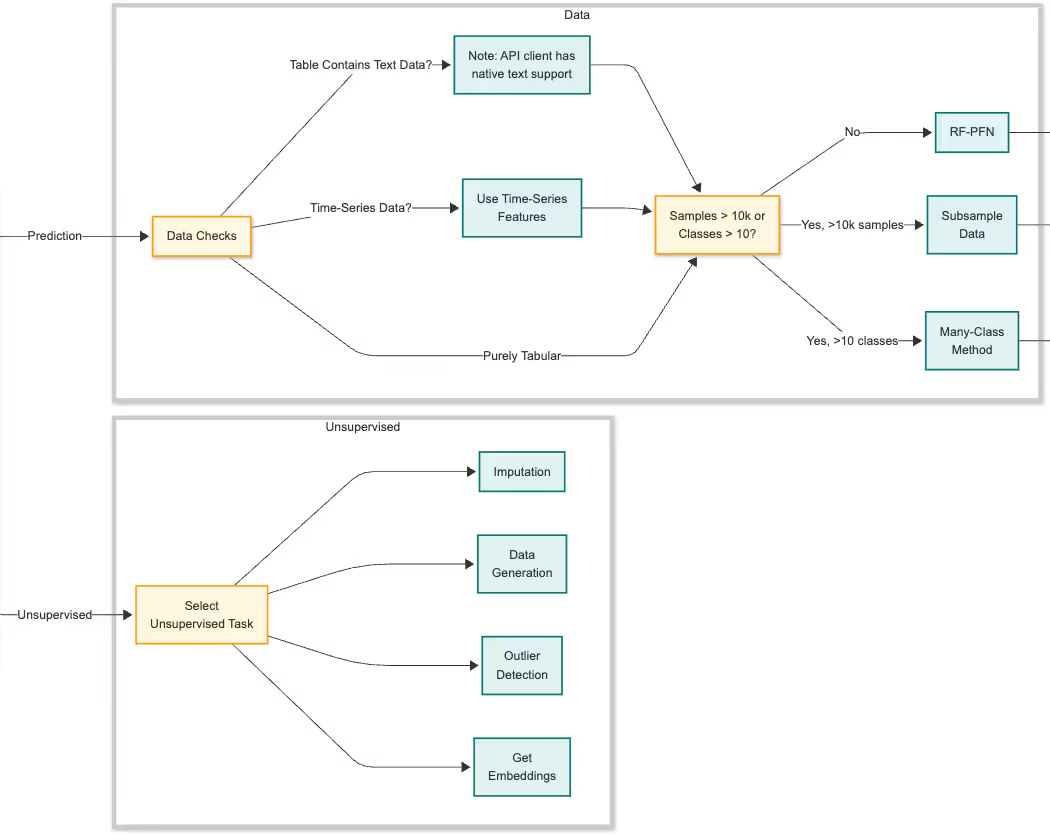

What kind of task are you solving?

TabPFN isn’t just for prediction; it also supports a range of unsupervised learning tasks, including:

- Imputation: fill missing values using generative modeling

- Data Generation: sample realistic synthetic tabular data

- Density Estimation / Outlier Detection: score how likely each sample is and flag unusually unlikely ones as outliers

- Embeddings: extract meaningful sample representations for downstream use
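Once a density model assigns each sample a score, outlier detection reduces to flagging the least likely samples. The sketch below assumes you already have per-sample log-density scores (how the extension computes them is not shown here). `flag_outliers` and its `contamination` parameter are hypothetical names for illustration, and a fixed-fraction cutoff is just one common thresholding choice, not necessarily what the extension does.

```python
import math
from typing import List, Sequence


def flag_outliers(log_densities: Sequence[float], contamination: float = 0.05) -> List[int]:
    """Return indices of the lowest-density samples (hypothetical helper).

    `contamination` is the assumed fraction of samples to flag; at least one
    sample is flagged whenever the input is non-empty.
    """
    if not 0.0 < contamination < 1.0:
        raise ValueError("contamination must be between 0 and 1 (exclusive)")
    n_flag = max(1, math.ceil(contamination * len(log_densities)))
    # Rank samples from least to most likely under the density model.
    ranked = sorted(range(len(log_densities)), key=lambda i: log_densities[i])
    return sorted(ranked[:n_flag])


if __name__ == "__main__":
    scores = [-1.0, -1.2, -9.5, -0.8, -1.1]  # toy scores, not real model output
    print(flag_outliers(scores, contamination=0.3))  # indices 1 and 2
```

A percentile cutoff keeps the helper independent of the score scale; if your density model produces calibrated likelihoods, an absolute threshold may be more appropriate.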

If you’re working on a prediction problem, the flowchart walks you through key decision points based on data modality, scale, and complexity:

- Working with text? Use the API, which natively supports mixed-modality inputs

- Time-series data? Try TabPFN-Time-Series, with built-in temporal feature pipelines

- More than 10,000 samples? Use our subsampling extension for scalable performance

- More than 10 classes? Use the Many Class classifier

- Otherwise: try RF-PFN, a random-forest-based classifier with a TabPFN model in each of the leaves
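As a rough illustration, the prediction branch of the flowchart can be read as a simple decision function. This is a hypothetical sketch: `TaskProfile` and `suggest_extension` are names made up for this post, the checks assume the order in which the bullets are listed, and the actual flowchart may branch differently. It only returns a recommendation label; it does not call any TabPFN code.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    """Hypothetical summary of a prediction task (not part of any TabPFN package)."""
    has_text: bool = False
    is_time_series: bool = False
    n_samples: int = 0
    n_classes: int = 0  # use 0 for regression tasks


def suggest_extension(task: TaskProfile) -> str:
    """Map a task profile to the flowchart branch described in the list.

    Assumption: checks run in the order the bullets are listed.
    """
    if task.has_text:
        return "API client"
    if task.is_time_series:
        return "TabPFN-Time-Series"
    if task.n_samples > 10_000:
        return "subsampling extension"
    if task.n_classes > 10:
        return "Many Class classifier"
    return "RF-PFN"


if __name__ == "__main__":
    # A 50,000-row tabular classification problem with 3 classes.
    print(suggest_extension(TaskProfile(n_samples=50_000, n_classes=3)))
```

Running this prints `subsampling extension`, since the sample-count check fires before the class-count check.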

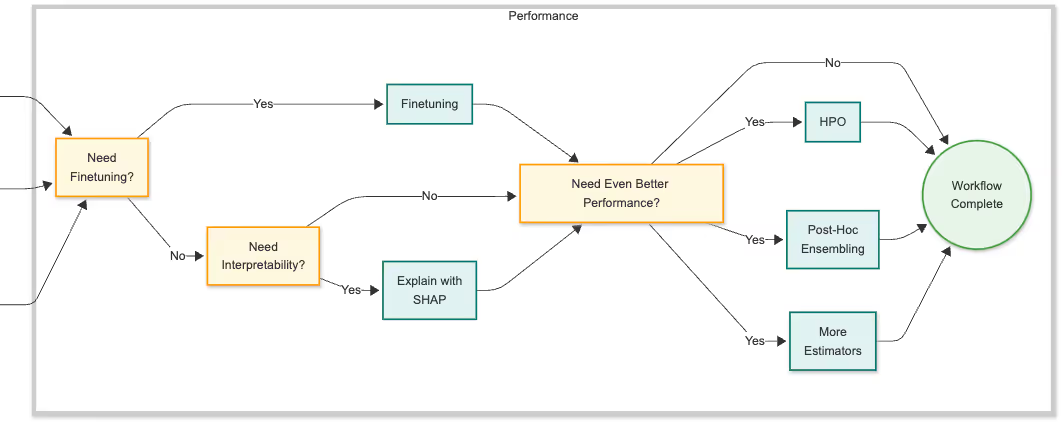

Want to go further?

The extensions also include modules to boost performance and interpret results. Get in touch if you’d like to go deeper into any of these:

- Finetuning: further train TabPFN on your own dataset to increase accuracy for specific tasks

- Interpretability: use Shapley values to understand how input features influence predictions

- Hyperparameter Optimization (HPO): tune TabPFN’s settings for additional performance

- Post-Hoc Ensembles: combine multiple TabPFN models using AutoTabPFN

You can access the full flowchart image on GitHub here. Every node in the flowchart links to the relevant repository.

We’re sharing this not just as a guide, but as an invitation to explore what’s already possible. More posts on specific areas are coming shortly.

We’ll keep building.